The challenge of running Verba RAG and Ollama locally on Windows.

How to set it up.

This short post may help if you are getting errors when trying to run both Ollama and Weaviate's Verba RAG framework on a local Windows machine.

Context

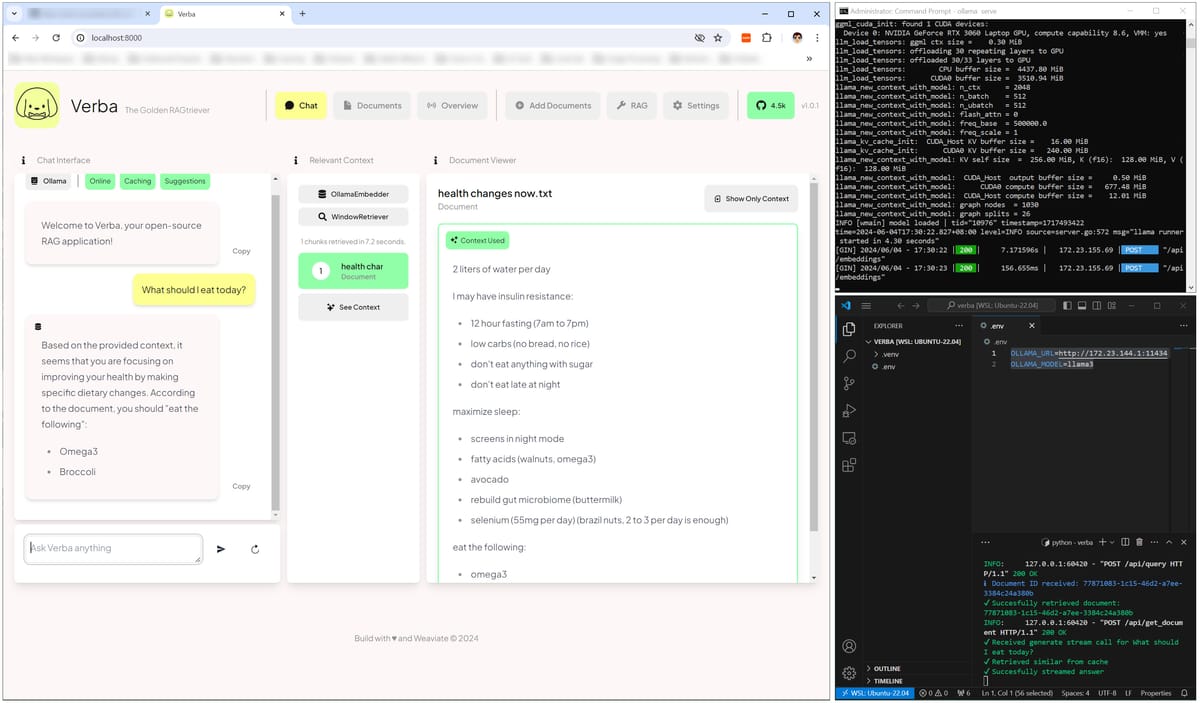

When I ran Ollama as a service on my Windows 10 laptop and Verba RAG inside a WSL virtual environment on the same machine, I got errors when uploading documents.

I ran into two obstacles:

Obstacle 1: WSL is not seeing the localhost

When you set the .env file to:

OLLAMA_URL=http://127.0.0.1:11434 (or localhost:11434)

OLLAMA_MODEL=llama3

the Verba app will start, but it will not connect to Ollama when uploading documents.

The problem is that WSL has its own network address, so inside WSL, localhost refers to the WSL environment itself rather than to the Windows host where Ollama is running.

To find the IP address at which WSL sees your Windows host, open your WSL terminal and run:

ip route show | grep -i default | awk '{ print $3}'

This prints the IP address of your Windows host from the WSL point of view. In my case it was:

172.23.144.1 (your IP may be different)
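If you want to reuse that lookup in a script, here is a minimal sketch of the same idea. The function name `gateway_from_routes` is my own and not part of WSL or Verba; it simply picks the third field of the `default` route line, as the one-liner above does.

```shell
#!/usr/bin/env bash
# Pull the default gateway (third field of the "default via ..." line)
# out of `ip route show` output supplied on stdin.
# gateway_from_routes is a hypothetical helper name used for illustration.
gateway_from_routes() {
  awk '$1 == "default" { print $3; exit }'
}

# Only query the real routing table when the `ip` tool is available.
if command -v ip >/dev/null 2>&1; then
  host_ip=$(ip route show | gateway_from_routes)
  echo "Windows host as seen from WSL: ${host_ip:-not found}"
fi
```

Reading the routes from stdin keeps the parsing separate from the lookup, so you can check it against a pasted copy of your own `ip route show` output.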

Therefore, the .env variables must be set as:

OLLAMA_URL=http://172.23.144.1:11434

OLLAMA_MODEL=llama3
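The address WSL assigns to the Windows host may change after a restart, so you might prefer not to edit the file by hand each time. The sketch below is one way to do it, assuming GNU `sed` (the default in most WSL distributions). The function name `set_ollama_url` and the `.env` path in the usage line are my own placeholders, not something Verba provides.

```shell
#!/usr/bin/env bash
# Set or replace the OLLAMA_URL line in an env file.
# set_ollama_url is a hypothetical helper; $1 is the env file, $2 the URL.
set_ollama_url() {
  env_file=$1
  url=$2
  touch "$env_file"
  if grep -q '^OLLAMA_URL=' "$env_file"; then
    # Replace the existing line in place (GNU sed syntax).
    sed -i "s|^OLLAMA_URL=.*|OLLAMA_URL=${url}|" "$env_file"
  else
    # No such line yet, so append one.
    printf 'OLLAMA_URL=%s\n' "$url" >> "$env_file"
  fi
}

# Usage (adjust the path to wherever your Verba .env lives):
# set_ollama_url .env "http://172.23.144.1:11434"
```

Other lines in the file, such as OLLAMA_MODEL, are left untouched.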

Obstacle 2: Ollama is not listening on all local interfaces

You have now solved only part of the issue. Next, you need to run your local Ollama differently so that it listens on all local interfaces.

Close your Ollama service (find the little llama icon in the taskbar, right click, quit).

Open a CMD terminal in admin mode, and type the following:

set OLLAMA_HOST=0.0.0.0

ollama serve

Ollama now runs in that terminal, where you can watch its log output.

Your Verba app will now connect to it.
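Before going back to Verba, you can check the connection from the WSL side. This is a rough sketch that assumes `curl` is installed in your WSL distribution. It calls Ollama's `/api/tags` endpoint, which lists the locally available models. The function name `check_ollama` is my own, and the default address is the one from my machine, so replace it with yours.

```shell
#!/usr/bin/env bash
# Return success if an Ollama server answers at the given base URL.
# check_ollama is a hypothetical helper name used for illustration.
check_ollama() {
  curl --silent --fail --max-time 3 "$1/api/tags" >/dev/null
}

# Placeholder address: replace with the IP found for your own machine.
OLLAMA_URL=${OLLAMA_URL:-http://172.23.144.1:11434}

if check_ollama "$OLLAMA_URL"; then
  echo "Ollama is reachable at $OLLAMA_URL"
else
  echo "Cannot reach Ollama at $OLLAMA_URL"
fi
```

If the check fails, the likely suspects are the two obstacles above: the address in OLLAMA_URL, or Ollama not having been started with OLLAMA_HOST=0.0.0.0.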

Misc

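If you want to make the host setting permanent so you don't have to set it every time, run the following from an administrator CMD prompt. setx only affects terminals and programs started afterwards, so restart Ollama once it has run: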

setx OLLAMA_HOST "0.0.0.0" /M

I hope this helps!